5 Commonly Misinterpreted Customer Service Metrics

“Do you use a toothpaste that comes recommended by 8 out of 10 dentists?”

That’s what men in white lab coats sporting dazzling smiles would ask viewers while recommending a popular toothpaste brand in an early 2000s TV advert.

Viewers could reasonably conclude that 80% of accredited dentists preferred this toothpaste over every other.

When the Advertising Standards Authority investigated this ad in 2007, it came to light that the surveyed dentists were asked a multiple-choice question about which toothpaste brands they’d recommend to consumers. The dentists gave more than one answer, including the brand’s competitors.

They were never asked to choose one over many.

While the incident taught us to watch out for misleading claims, it also called for questioning random statistics thrown at us without context.

Numbers don’t lie, but without contextual clarity, they aren’t beacons of truth either.

But let me be clear: this in no way suggests that the customer service tools you use are giving you inaccurate metrics. It just means that you must dig deeper into the context behind the numbers to correctly analyze the customer support metrics in your customer service software.

But what does ‘correctly analyze’ entail?

Let’s find out by exploring how some of these customer support metrics are subject to misinterpretation.

Table of Contents

- Net Promoter Score (NPS)

- First Contact Resolution Rate (FCR)

- Customer Satisfaction Score (CSAT)

- Average Handling Time (AHT)

- Ticket Volume

- Don’t be wed to just one KPI

Net Promoter Score (NPS)

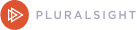

NPS is a measure of customer loyalty. The score pivots around one question: “On a scale of 0 to 10, how likely are you to recommend our brand?”

Based on their answers, respondents are grouped into promoters (9 to 10), passives (7 to 8), and detractors (0 to 6).
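For context, here is a minimal sketch of how the score is usually computed: the percentage of promoters minus the percentage of detractors. The function name `nps` and the sample ratings are hypothetical and only there for illustration.

```python
def nps(scores):
    """Net Promoter Score for a list of 0-10 ratings.

    Promoters rate 9-10, detractors rate 0-6, and passives (7-8)
    only count toward the total number of responses.
    """
    if not scores:
        raise ValueError("need at least one rating")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey responses, not real data.
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(nps(ratings))  # 5 promoters, 3 detractors, 10 responses -> 20.0
```

Notice that the result is a single number between -100 and 100. Nothing in it records which product, feature, or experience the respondent had in mind.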

How can you misinterpret your Net Promoter Score?

Let’s say you’re a regular customer at your local burger joint. But it turns out you don’t visit them for their burgers. You’re in love with their pesto sandwiches instead.

So when someone asks you if you would recommend the restaurant, you’d probably tell them, “I’m not a big fan of their burgers, but their pesto sandwich is just chef’s kiss”.

Similarly, when an organization with many product verticals asks a user if they would recommend the brand to a friend or a colleague, the answer could be both yes and no.

And that’s the fallacy of using a standalone metric like Net Promoter Score. It doesn’t tell you what aspect of the brand they’ll recommend or, worse, advise against.

We get the appeal of the metric’s simplicity. And companies sure do love hearing that their customers are loyal fans. But don’t be disappointed if the NPS doesn’t align with your business growth or explain your customer churn.

Remember to account for market conditions when you’re interpreting the metric. Let’s say you’re selling insurance for pets, a segment that, unlike health or life insurance for humans, doesn’t see fierce competition.

Your customers might not recommend your service, but you might still see growth because of the lack of better alternatives available. You’ll continue to have their loyalty until more players explore the pet insurance market.

You may also misclassify consumers owing to the nature of the NPS scale. Here’s an instance that proves this argument.

Christina Stahlkopf and her team asked over 2,000 consumers to each rate three of ten global brands (Twitter, Burger King, American Express, Gap, Uber, Netflix, Microsoft, Airbnb, Amazon, and Starbucks) that they had used or bought from. They received over 5,000 brand ratings.

The respondents were asked the standard NPS question, i.e., “Would you recommend this brand?”

Her team’s goal was to find out if there was a difference between respondents’ intentions and their actions.

So Christina and her team also asked the respondents, “Have you recommended this brand?” and “Have you discouraged anyone from choosing this brand?”

Here’s what they found:

16% of the customers were classified as NPS detractors. On the NPS scale, they rated their brands between 0 and 6, which is taken to mean that they were dissatisfied with the brand and would advise others against using it.

But here’s the big reveal:

When Christina’s team asked the respondents the follow-up question, they found that only 4% had actually discouraged anyone from choosing the brand. That’s a 75% decrease in the brand’s negative sentiment.

Similarly, 50% of the customers were classified as promoters but 69% actually recommended the brand when asked the follow-up question. That’s a whopping 38% increase in positive sentiment.
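Both percentages are relative changes, not differences in percentage points, which is easy to miss on a quick read. A small sketch of the arithmetic, using only the figures quoted above (the helper name `relative_change` is ours):

```python
def relative_change(before, after):
    """Percentage change from `before` to `after`, relative to `before`."""
    return 100 * (after - before) / before


# Detractors: 16% classified by the NPS scale vs. 4% who actually discouraged.
print(relative_change(16, 4))   # -75.0, a 12-point drop

# Promoters: 50% classified by the NPS scale vs. 69% who actually recommended.
print(relative_change(50, 69))  # 38.0, a 19-point rise
```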

We echo Christina’s sentiments when it comes to NPS. It’s a great tool if used as a compass to understand consumer sentiment. A compass won’t show you the actual path of where you’re headed but it will tell you that you’re more or less headed in the right direction.

First Contact Resolution Rate (FCR)

FCR is the percentage of customer requests resolved by a support agent in the first interaction with the customer.

In other words, the customer’s issue is resolved within their first call. Ideally, the higher the FCR, the higher the satisfaction rate. But does that always hold?

How can you misinterpret First Contact Resolution Rate?

For starters, the urge to resolve queries within the first call could lead to your customer support team compromising on support quality.

Let’s say you called your credit card company’s helpdesk number to discuss increasing your credit limit. The agent says this request must be made to another department and gives you their number.

Here comes the questionable bit about FCR: the agent marks your support ticket as ‘Resolved’ in the system because you’re someone else’s problem now.

While it’s a quick fix to ensure a high FCR, this wouldn’t necessarily translate to higher customer satisfaction scores.
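To see how much this can inflate the number, here is a rough sketch that computes FCR twice: once as the agents reported it, and once without the tickets that were closed by sending the customer elsewhere. The `Ticket` fields and the sample data are hypothetical, and a real helpdesk export will look different.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    resolved_on_first_contact: bool  # what the agent marked in the system
    handed_off: bool                 # customer was redirected to another team


def fcr(tickets, count_handoffs=True):
    """First Contact Resolution rate as a percentage of all tickets.

    With count_handoffs=False, tickets that were marked resolved but
    handed off to another department no longer count as resolved.
    """
    if not tickets:
        raise ValueError("need at least one ticket")
    hits = sum(
        1
        for t in tickets
        if t.resolved_on_first_contact and (count_handoffs or not t.handed_off)
    )
    return 100 * hits / len(tickets)


# Hypothetical queue: two of the three 'resolved' tickets were hand-offs.
tickets = [
    Ticket(True, False),
    Ticket(True, True),
    Ticket(True, True),
    Ticket(False, False),
]
print(fcr(tickets))                        # reported FCR: 75.0
print(fcr(tickets, count_handoffs=False))  # stricter FCR: 25.0
```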

Also, the agent might not be the ‘first contact’ for a customer’s query.

For instance, the customer has probably already tried to find the solution through self-service channels like your website chatbot or your knowledge base and, failing that, may even have tried other channels like social media.

This would technically make the call center the ‘fourth contact’ touchpoint for their resolution. The agent may already be dealing with a dissatisfied customer at this point.

Customer Satisfaction Score (CSAT)

CSAT measures whether your customers are satisfied with your product, service, or customer support.

How are CSAT scores misleading?

For one, companies often rely on CSAT as a standalone metric to measure customer service. Maybe your CSAT score is high, but that doesn’t necessarily translate to customer satisfaction as a whole.

When you ask customers – “How satisfied are you with the service provided by our support team member?” – in your customer satisfaction surveys, they might respond with “Satisfied”.

Does that mean they’re happy customers? A single question like that doesn’t necessarily paint the complete picture.

If you have a high CSAT score, but your customer is put through high-effort experiences like:

- Long wait times

- Multiple call transfers

- Having to repeat their issues

- Frustrating chatbots

- An FAQ page without a search function

then your CSAT scores are misleading. As a result, you might end up making poor business decisions.

You have to read your CSAT alongside the Customer Effort Score (CES). What does that mean?

To get the full context, you must also ask existing and new customers: “How easy was it to resolve your query?”

This question essentially helps you calculate the Customer Effort Score. CES measures how much effort customers spend in getting the issue resolved. In essence, it tracks the ease of the customer journey.
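Here is a minimal sketch of reading the two scores side by side. The scoring conventions are assumptions, since they vary between survey tools: CSAT is taken as the share of 1 to 5 ratings that are 4 or higher, and CES as the average of 1 to 7 ease ratings where higher means easier. The sample responses are made up.

```python
def csat(ratings, satisfied_from=4):
    """Percentage of 1-5 ratings at or above `satisfied_from` (assumed convention)."""
    if not ratings:
        raise ValueError("need at least one rating")
    return 100 * sum(1 for r in ratings if r >= satisfied_from) / len(ratings)


def ces(ratings):
    """Mean of 1-7 ease ratings, higher meaning easier (assumed convention)."""
    if not ratings:
        raise ValueError("need at least one rating")
    return sum(ratings) / len(ratings)


# Hypothetical responses from the same five customers.
satisfaction = [5, 4, 4, 5, 3]
ease = [2, 3, 2, 4, 4]

print(csat(satisfaction))  # 80.0: looks healthy on its own
print(ces(ease))           # 3.0 out of 7: getting there took real effort
```

A high CSAT paired with a low ease score is the pattern worth investigating.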

Average Handling Time (AHT)

AHT measures the average total duration of a phone call, from start to finish. The time frame for AHT includes:

- The total talk time +

- The total hold time where the customer is put on hold during the call by the agent (usually to find answers to the query) +

- The post-call work time (like setting follow-up reminders).
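Put together, the three components above are summed across all calls and divided by the number of calls. A small sketch, with hypothetical durations in seconds:

```python
def average_handling_time(calls):
    """Average Handling Time in seconds.

    `calls` is a list of (talk, hold, post_call_work) tuples,
    one tuple per call, all in seconds.
    """
    if not calls:
        raise ValueError("need at least one call")
    total = sum(talk + hold + wrap for talk, hold, wrap in calls)
    return total / len(calls)


# Hypothetical call log: (talk, hold, post-call work) in seconds.
calls = [
    (300, 60, 40),
    (600, 120, 80),
    (180, 0, 20),
    (240, 30, 30),
]
print(average_handling_time(calls))  # 1700 seconds over 4 calls -> 425.0
```

Since it is an average, a single long call can move the figure noticeably without saying anything about how well that call went.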

How can Average Handling Time be a misleading metric?

Many CX leaders would agree that AHT is a terrible standalone metric for judging customer service performance, agent productivity, and call quality.

Factors such as:

- Having a chatty customer

- Complexity of the issue

- Incentivizing shorter call times

pollute the metric.

A low AHT could mean your customer service agents treat a customer’s problem as a one-and-done ticket and may compromise on support quality.

A high average handle time, on the other hand, isn’t necessarily a bad thing in terms of support experience.

Let’s say your customer reaches out to one of your agents (let’s call her Emma) about his canceled flight. He needs to get to his daughter’s graduation ceremony on the day he booked the flight for. So it would be futile for Emma to offer to rebook him on a flight the next day.

So she spends an extra 15-20 minutes looking for every option that would get him there on time. She might even take time to explore options that combine a flight with a train or a cab ride.

Emma’s AHT would be way over the ideal benchmark, but the time she spent empathizing with your customer would possibly have earned you not just a loyal customer but a brand advocate.

Ticket Volume

Ticket volume is the number of open tickets and pending queries in your customer support queue.

How do you misinterpret Ticket Volume data?

Low ticket volumes or zero backlogs don’t necessarily mean you’ve caught up on all your pending tickets. It could also mean that your customer support is so bad that people just give up and move on to your competitors instead.

91% of customers will silently abandon your brand without contacting your support team even once.

At the same time, more support requests aren’t necessarily a bad thing. What’s important is to find out why the volume is rising.

It could very well be that your customer base is growing, and an increasing number of customers are interacting with your product for the first time and have basic queries.

The pandemic, for instance, saw a steep spike in IT support tickets.

77% of those tickets had VPN issues, 65% complained about the poor video-call quality, and 51% wanted to fix their poor wi-fi connection. Not because the service was necessarily bad, but because the pandemic led us to dramatically embrace digitization. This, in turn, led to an increasing number of customers signing up for the first time.

Don’t forget to consider the spikes in the total number of tickets when you’ve introduced a new feature or made changes to an existing one.

Don’t be wed to just one KPI

No one metric can serve as an anchor for business growth.

Even if your business outcomes align with the traditional reasoning and assumptions of the metrics, continue using multiple key performance indicators to always be on the lookout for intriguing deviations.